Biography

Welcome to my personal webpage! I am a third-year PhD student in the Robotics, Perception and Real Time (RoPeRT) group at the University of Zaragoza (Unizar), under the supervision of Prof. Juan D. Tardós. Previously, I completed a Bachelor’s degree in Computer Science and a Master’s degree in Biomedical Engineering, both at Unizar, where I started my career as a Computer Vision researcher.

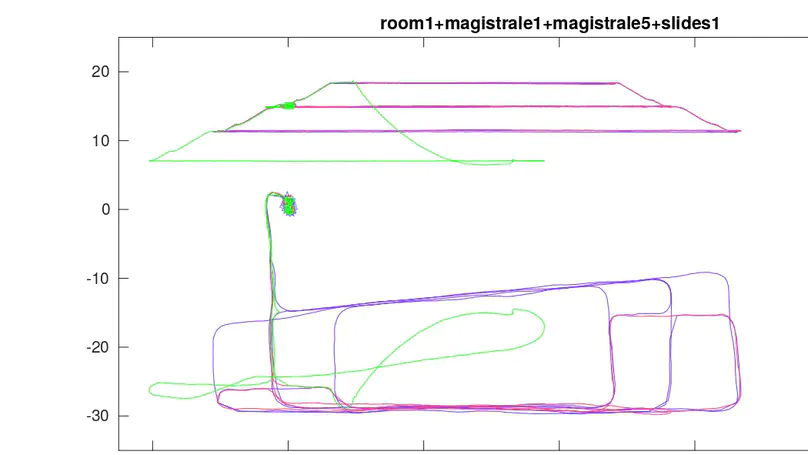

My work revolves around how computers perceive and understand their surroundings by means of Visual Simultaneous Localization and Mapping (V-SLAM) techniques. I am especially interested in, and motivated by, challenging situations that impair the use of these technologies, such as deformable V-SLAM, which has direct applications in fields like Minimally Invasive Surgery.

Download my resumé.

- Computer Vision

- Robotics

- Deep Learning

- PhD in Computer Vision, 2023, University of Zaragoza

- MEng in Biomedical Engineering, 2019, University of Zaragoza

- BSc in Computer Engineering, 2018, University of Zaragoza

Experience

Researcher in Computer Vision:

- Rigid Visual SLAM for mobile agents

- Deformable Visual SLAM for medical sequences

Assistant professor:

- Introduction to Machine Learning

- Simultaneous Localization and Mapping

Featured Publications

Monocular SLAM in deformable scenes will open the way to multiple medical applications like computer-assisted navigation in endoscopy, automatic drug delivery or autonomous robotic surgery. In this paper we propose a novel method to simultaneously track the camera pose and the 3D scene deformation, without any assumption about environment topology or shape. The method uses an illumination-invariant photometric approach to track image features, and estimates camera motion and deformation by combining reprojection error with spatial and temporal regularization of deformations. Our results in simulated colonoscopies show the method’s accuracy and robustness in complex scenes under increasing levels of deformation. Our qualitative results in human colonoscopies from the Endomapper dataset show that the method is able to successfully cope with the challenges of real endoscopies like deformations, low texture and strong illumination changes. We also compare with previous tracking methods in simpler scenarios from the Hamlyn dataset, where we obtain competitive performance without needing any topological assumption.

This paper presents ORB-SLAM3, the first system able to perform visual, visual-inertial and multi-map SLAM with monocular, stereo and RGB-D cameras, using pin-hole and fisheye lens models. The first main novelty is a feature-based tightly-integrated visual-inertial SLAM system that fully relies on Maximum-a-Posteriori (MAP) estimation, even during the IMU initialization phase. The result is a system that operates robustly in real-time, in small and large, indoor and outdoor environments, and is 2 to 5 times more accurate than previous approaches. The second main novelty is a multiple map system that relies on a new place recognition method with improved recall. Thanks to it, ORB-SLAM3 is able to survive long periods of poor visual information: when it gets lost, it starts a new map that will be seamlessly merged with previous maps when revisiting mapped areas. Compared with visual odometry systems that only use information from the last few seconds, ORB-SLAM3 is the first system able to reuse all previous information in all the algorithm stages. This allows bundle adjustment to include co-visible keyframes that provide high-parallax observations, boosting accuracy, even if they are widely separated in time or come from a previous mapping session. Our experiments show that, in all sensor configurations, ORB-SLAM3 is as robust as the best systems available in the literature, and significantly more accurate. Notably, our stereo-inertial SLAM achieves an average accuracy of 3.6 cm on the EuRoC drone and 9 mm under quick hand-held motions in the room of the TUM-VI dataset, a setting representative of AR/VR scenarios. For the benefit of the community we make public the source code.

Recent Publications

Contact

- jjgomez@unizar.es

- +34 711 75 17 75

- 1 Maria de Luna, Zaragoza, Aragón 50018

- Enter Ada Byron Building, first floor, lab 1.08